This post is about some changes recently made to Rust's ErrorKind, which aims to categorise OS errors in a portable way.

Audiences for this post

- The educated general reader interested in a case study involving error handling, stability, API design, and/or Rust.

- Rust users who have tripped over these changes. If this is you, you can cut to the chase and skip to "How to fix code broken by this change".

Background and context

Error handling principles

Handling different errors differently is often important (although, sadly, often neglected). For example, if a program tries to read its default configuration file, and gets a "file not found" error, it can proceed with its default configuration, knowing that the user hasn't provided a specific config.

If it gets some other error, it should probably complain and quit, printing the message from the error (and the filename). Otherwise, if the network fileserver is down (say), the program might erroneously run with the default configuration and do something entirely wrong.
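A minimal Rust sketch of the config-file example above, under some assumptions: the function name read_config and the file name example.conf are made up for illustration, and the "configuration" is just the file's text. The NotFound case means "use the defaults"; every other error is passed back so the caller can report it (with the filename) and quit.

```rust
use std::fs;
use std::io::{self, ErrorKind};
use std::path::Path;

/// Hypothetical helper: reads the config file at `path`.
/// Returns Ok(None) when the file does not exist, meaning "use the defaults";
/// every other error is handed back to the caller to report.
fn read_config(path: &Path) -> io::Result<Option<String>> {
    match fs::read_to_string(path) {
        Ok(text) => Ok(Some(text)),
        // No config file: the user hasn't provided one, so defaults are fine.
        Err(e) if e.kind() == ErrorKind::NotFound => Ok(None),
        // Anything else (permissions, a dead network fileserver, ...) is fatal.
        Err(e) => Err(e),
    }
}

fn main() {
    // "example.conf" is a made-up file name for illustration.
    let path = Path::new("example.conf");
    match read_config(path) {
        Ok(Some(text)) => println!("loaded {} ({} bytes)", path.display(), text.len()),
        Ok(None) => println!("{} not found, using default configuration", path.display()),
        Err(e) => {
            eprintln!("could not read config file {}: {}", path.display(), e);
            std::process::exit(1);
        }
    }
}
```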

Rust's portability aims

The Rust programming language tries to make it straightforward to write portable code. Portable error handling is always a bit tricky. One of Rust's facilities in this area is std::io::ErrorKind, an enum that tries to categorise (and, sometimes, enumerate) OS errors. The idea is that a program can check the error kind and handle the error accordingly.

Because these ErrorKinds are part of the Rust standard library, getting this right doesn't require you to delve down into the actual underlying operating system error number and write separate code for each platform you want to support. You can simply check whether the error is ErrorKind::NotFound (or whatever).
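As a rough illustration (the helper is_not_found and the file name are hypothetical), the portable check is a single comparison on err.kind(), while err.raw_os_error() exposes the platform-specific number you would otherwise have to interpret separately on each platform:

```rust
use std::fs::File;
use std::io::{self, ErrorKind};

/// Illustrative helper: did this I/O error mean "no such file"?
/// The same comparison works on every platform: std translates the
/// platform's own error number (e.g. ENOENT on Unix) into ErrorKind::NotFound.
fn is_not_found(err: &io::Error) -> bool {
    err.kind() == ErrorKind::NotFound
}

fn main() {
    // Assumes no file with this (made-up) name exists in the current directory.
    let err = File::open("no-such-file.conf").unwrap_err();
    // The raw number is platform-specific; the kind is portable.
    println!("raw OS error: {:?}, kind: {:?}", err.raw_os_error(), err.kind());
    assert!(is_not_found(&err));
}
```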

Because ErrorKind is so important in many Rust APIs, some code that isn't really making an OS call still has to provide an ErrorKind. For this purpose, Rust provides a special category, ErrorKind::Other, which doesn't correspond to any particular OS error.
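For instance, here is a sketch of code that has to produce an io::Error even though nothing went wrong at the OS level (parse_port is a hypothetical function). The error is built with io::Error::new and ErrorKind::Other, and carries no raw OS error number:

```rust
use std::io::{self, ErrorKind};

/// Hypothetical example: a failure unrelated to any OS call (parsing a port
/// number) that must still be reported as an io::Error.
fn parse_port(s: &str) -> io::Result<u16> {
    s.trim()
        .parse::<u16>()
        .map_err(|e| io::Error::new(ErrorKind::Other, format!("bad port {:?}: {}", s, e)))
}

fn main() {
    let err = parse_port("http").unwrap_err();
    // Kind is Other, and there is no underlying OS error number at all.
    println!("{:?} / {:?}: {}", err.kind(), err.raw_os_error(), err);
}
```

Recent Rust versions also offer io::Error::other(...) as a shorthand for io::Error::new(ErrorKind::Other, ...).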

Rust's stability aims and approach

Another thing Rust tries to do is keep existing code working.

More specifically, Rust tries to:

1. Avoid making changes which would contradict the previously-published documentation of Rust's language and features.

2. Tell you if you accidentally rely on properties which are not part of the published documentation.

By and large, this has been very successful. It means that if you write code now, and it compiles and runs cleanly, it is quite likely to continue to work properly in the future, even as the language and ecosystem evolve.

This blog post is about a case where Rust failed at the second of these aims, and, sadly, it turned out that several people had accidentally relied on something the Rust project definitely intended to change.

Furthermore, it was something which needed to change.

And the new (corrected) way of using the API is not so obvious.

Rust enums, as relevant to io::ErrorKind

(Very briefly:)

When you have a value which is an io::ErrorKind, you can compare it with specific values:

    if error.kind() == ErrorKind::NotFound { use_default_configuration(); }

But in Rust it's more usual to write something like this (which you can read like a switch statement):

    match error.kind() {
        ErrorKind::NotFound => use_default_configuration(),
        _ => panic!("could not read config file {:?}: {}", file, error),
    }

Here _ means "anything else". Rust insists that match statements are exhaustive, meaning that each one covers all the possibilities. So if you left out the line with the _, it wouldn't compile.

Rust enums can also be marked non_exhaustive, which is a declaration by the API designer that they plan to add more variants. This has been done for ErrorKind, so the _ is mandatory, even if you write out all the possibilities that exist right now: this ensures that if new ErrorKinds appear, they won't stop your code compiling.
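To make the non_exhaustive rule concrete, here is a small sketch (describe is a hypothetical function). Because ErrorKind is declared #[non_exhaustive] in the standard library, any match on it in your own crate must end with a wildcard arm:

```rust
use std::io::ErrorKind;

/// Illustrative mapping from a few error kinds to messages.
fn describe(kind: ErrorKind) -> &'static str {
    match kind {
        ErrorKind::NotFound => "no such file or directory",
        ErrorKind::PermissionDenied => "permission denied",
        ErrorKind::Interrupted => "interrupted, try again",
        // Required: ErrorKind is #[non_exhaustive], so a match outside std
        // needs a wildcard arm even if every current variant were listed.
        // It also catches any kinds added in future Rust releases.
        _ => "some other error",
    }
}

fn main() {
    println!("{}", describe(ErrorKind::PermissionDenied));
}
```

Inside the crate that defines a non_exhaustive enum, the wildcard is not required; the rule applies to code in other crates, which is why it affects every user of std.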

Improving the error categorisation

The set of error categories stabilised in Rust 1.0 was too small. It missed many important kinds of error. This makes writing error-handling code awkward. In any case, we expect to add new error categories occasionally. I set about trying to improve this by proposing new ErrorKinds. This obviously needed considerable community review, which is why it took about 9 months.

The trouble with Other and tests

Rust has to assign an ErrorKind to every OS error, even ones it doesn't really know about. Until recently, it mapped all errors it didn't understand to ErrorKind::Other, reusing the category for "not an OS error at all".

Serious people who write serious code like to have serious tests. In particular, testing error conditions is really important. For example, you might want to test your program's handling of a full disk, to make sure it doesn't crash or corrupt files. You would set up some contraption that would simulate a full disk. And then, in your tests, you might check that the error was correct.

But until very recently (and still, at the time of writing, in Stable Rust), there was no ErrorKind::StorageFull. You would get ErrorKind::Other. If you were diligent, you would dig out the OS error code (and check for ENOSPC on Unix, the corresponding Windows errors, etc.). But that's tiresome.
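The diligent approach might look something like the following sketch. The helper is_disk_full is hypothetical, and the error numbers are hard-coded for illustration (ENOSPC is 28 on Linux, macOS and the BSDs; ERROR_HANDLE_DISK_FULL and ERROR_DISK_FULL are 39 and 112 on Windows); real code would more likely take them from the libc or windows-sys crates.

```rust
use std::io;

/// Hypothetical helper: is `err` the OS's "disk full" error?
/// Numbers are hard-coded for illustration only.
fn is_disk_full(err: &io::Error) -> bool {
    #[cfg(unix)]
    const DISK_FULL: &[i32] = &[28]; // ENOSPC on Linux, macOS and the BSDs
    #[cfg(windows)]
    const DISK_FULL: &[i32] = &[39, 112]; // ERROR_HANDLE_DISK_FULL, ERROR_DISK_FULL
    #[cfg(not(any(unix, windows)))]
    const DISK_FULL: &[i32] = &[];

    match err.raw_os_error() {
        Some(code) => DISK_FULL.contains(&code),
        None => false, // not an OS error at all
    }
}

fn main() {
    let err = io::Error::from_raw_os_error(28);
    println!("{} -> disk full? {}", err, is_disk_full(&err));
}
```

On Linux, one convenient contraption for such a test is /dev/full, on which every write fails with ENOSPC.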

The more obvious thing to do is to check that the kind is Other.

Obvious but wrong.

ErrorKind is non_exhaustive, implying that more error kinds will appear, and, naturally, these would more finely categorise previously-Other OS errors.

Unfortunately, the documentation note "Errors that are Other now may move to a different or a new ErrorKind variant in the future." was only added in May 2020.

So the wrongness of the "obvious" approach was, itself, not very obvious.

And even with that docs note, there was no compiler warning or anything.

The unfortunate result is that there is a body of code out there in the world which might break any time an error that was previously Other becomes properly categorised.

Furthermore, there was nothing to stop new people from writing new obvious-but-wrong code.

Chosen solution: Uncategorized

The Rust developers wanted an engineered safeguard against the bug of assuming that a particular error shows up as Other. They chose the following solution:

There is now a new ErrorKind::Uncategorized, which is used for all OS errors for which there isn't a more specific categorisation. The fallback translation of unknown errors was changed from Other to Uncategorized.

This is de jure justified by the fact that this enum has always been marked non_exhaustive. But in practice, because this bug wasn't previously detected, code containing it exists in the wild. That code now breaks (usually in the form of failing test cases).

Usually when Rust starts to detect a particular programming error, it is reported as a new warning, which doesn't break anything. But that's not possible here, because this is a behavioural change.

The new ErrorKind::Uncategorized is marked unstable. This makes it impossible to write code on Stable Rust which insists that an error comes out as Uncategorized. So one cannot now write code that will break when new ErrorKinds are added.

That's the intended effect.

The downside is that this does break old code, and, worse, it is not as clear as it should be what the fixed code looks like.

Alternatives considered and rejected by the Rust developers

Not adding more ErrorKinds

This was not tenable. The existing set is already too small, and error categorisation is in any case expected to improve over time.

Just adding ErrorKinds as had been done before

This would mean occasionally breaking test cases (or, possibly, production code) when an error that was previously Other becomes categorised. The broken code would have been "obvious" but de jure wrong, just as it is now. So this option amounts to expecting this broken code to continue to be written, and continuing to break it occasionally.

Somehow using Rust's Edition system

The Rust language has a system to allow language evolution, where code declares its Edition (2015, 2018, 2021). Code from multiple editions can be combined, so that the ecosystem can upgrade gradually.

It's not clear how this could be used for ErrorKind, though. Errors have to be passed between code with different editions. If those different editions had different categorisations, the resulting programs would have incoherent and broken error handling.

Also, some of the schemes for making this change would mean that new ErrorKinds could only be stabilised about once every 3 years, which is far too slow.

How to fix code broken by this change

Most main-line error handling code already has a fallback case for unknown errors. Simply replacing any occurrence of Other in a match pattern with _ is right.
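As a sketch of what such a fallback looks like after the fix (should_retry and its retry policy are hypothetical), the catch-all _ arm is what handles unknown OS errors, whether they currently come out as Uncategorized or as some kind added in a later release:

```rust
use std::io::{self, ErrorKind};

/// Hypothetical retry policy for a failed I/O operation.
fn should_retry(err: &io::Error) -> bool {
    match err.kind() {
        ErrorKind::Interrupted | ErrorKind::WouldBlock | ErrorKind::TimedOut => true,
        // Unknown OS errors land here. Don't try to catch them with
        // `ErrorKind::Other`: they no longer come out as Other, and new
        // kinds may be added in future releases.
        _ => false,
    }
}

fn main() {
    let err = io::Error::from(ErrorKind::Interrupted);
    println!("retry? {}", should_retry(&err));
}
```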

How to fix thorough tests

The tricky problem is tests. Typically, a thorough test case wants to check that the error is "precisely as expected" (as far as the test can tell). Now that unknown errors come out as an unstable Uncategorized variant, that's not so easy. If the test is expecting an error that is currently not categorised, you want to write code that says "if the error is any of the recognised kinds, call it a test failure".

What does "any of the recognised kinds" mean here? It doesn't mean any of the kinds recognised by the version of the Rust stdlib that is actually in use. That set might get bigger. When the test is compiled and run later, perhaps years later, the error in this test case might indeed be categorised. What you actually mean is "the error must not be any of the kinds which existed when the test was written".

IMO, therefore, the right solution for such a test case is to cut and paste the current list of stable ErrorKinds into your code. This will seem wrong at first glance, because the list in your code and the list in Rust can get out of step. But when they do get out of step, you want your version, not the stdlib's. So freezing the list at a point in time is precisely right.

You probably only want to maintain one copy of this list, so put it somewhere central in your codebase's test support machinery. Periodically, you can update the list deliberately, and fix any resulting test failures.
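Here is a sketch of what such a frozen list and a matching test helper might look like. The names KNOWN_KINDS and assert_uncategorised_os_error are hypothetical; the list is a snapshot of the stable variants as of roughly Rust 1.55, so check it against the documentation for your own toolchain; and leaving Other out of the list is a design choice in this sketch (older toolchains reported unrecognised OS errors as Other), not something the standard library requires.

```rust
use std::io::{self, ErrorKind};

/// Stable ErrorKind variants, copied by hand when these tests were written
/// (illustrative snapshot, roughly Rust 1.55). Update deliberately.
/// `Other` is omitted on purpose: older toolchains used it for unknown OS errors.
pub const KNOWN_KINDS: &[ErrorKind] = &[
    ErrorKind::NotFound,
    ErrorKind::PermissionDenied,
    ErrorKind::ConnectionRefused,
    ErrorKind::ConnectionReset,
    ErrorKind::ConnectionAborted,
    ErrorKind::NotConnected,
    ErrorKind::AddrInUse,
    ErrorKind::AddrNotAvailable,
    ErrorKind::BrokenPipe,
    ErrorKind::AlreadyExists,
    ErrorKind::WouldBlock,
    ErrorKind::InvalidInput,
    ErrorKind::InvalidData,
    ErrorKind::TimedOut,
    ErrorKind::WriteZero,
    ErrorKind::Interrupted,
    ErrorKind::Unsupported,
    ErrorKind::UnexpectedEof,
    ErrorKind::OutOfMemory,
];

/// Hypothetical test-support helper: assert that `err` is an OS error that had
/// no specific kind when KNOWN_KINDS was frozen. Kinds added to std later
/// (which this error might now map to) are deliberately accepted.
pub fn assert_uncategorised_os_error(err: &io::Error) {
    assert!(err.raw_os_error().is_some(), "expected an OS error, got {:?}", err);
    assert!(
        !KNOWN_KINDS.contains(&err.kind()),
        "expected an uncategorised error, got {:?} (kind {:?})",
        err,
        err.kind()
    );
}

fn main() {
    // ENOSPC on Linux: an error with no stable kind when the list was frozen.
    assert_uncategorised_os_error(&io::Error::from_raw_os_error(28));
    println!("ok");
}
```

Because the helper only rejects kinds on the frozen list, the test keeps passing if a later Rust release gives this error a new, more specific kind (for example, if ENOSPC starts coming out as StorageFull).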

Unfortunately, this approach is not suggested by the documentation. In theory you could work all this out yourself from first principles, even from the situation before May 2020, but it seems unlikely that many people have done so. In particular, cutting and pasting the list of recognised errors would seem very unnatural.

Conclusions

This was not an easy problem to solve well.

I think Rust has done a plausible job given the various constraints, and the result is technically good.

It is a shame that this change, which made Rust's error-handling stability more correct, caused the most trouble for the most careful people who write the most thorough tests.

I also think the docs could be improved.

edited shortly after posting, and again 2021-09-22 16:11 UTC, to fix HTML slips


As a follow on to a previous blog entry of mine,

Debian Reunion Hamburg 2021

Moin!

I'm glad to finally be able to send out this invitation for the "Debian Reunion Hamburg 2021" taking place at the venue of the 2018 & 2019 MiniDebConfs!

The event will run from Monday, Sep 27 2021 until Friday Oct 1 2021, with Sunday, Sep 26 2021 as arrival day. IOW, Debian people meet again in Hamburg.

The exact format is less defined and structured than in previous years: probably we will just be hacking from Monday to Wednesday, have talks on Thursday and a nice day trip on Friday.


Sad but true, and at least something for some people. We should all do more local events. And more online events too, e.g. I think this is a great idea too:


This is not only the Year of the Ox, but also the year of Debian 11, code-named bullseye. The release lies ahead,


The Hindu also

Prerequisites

You will need the following prerequisites :


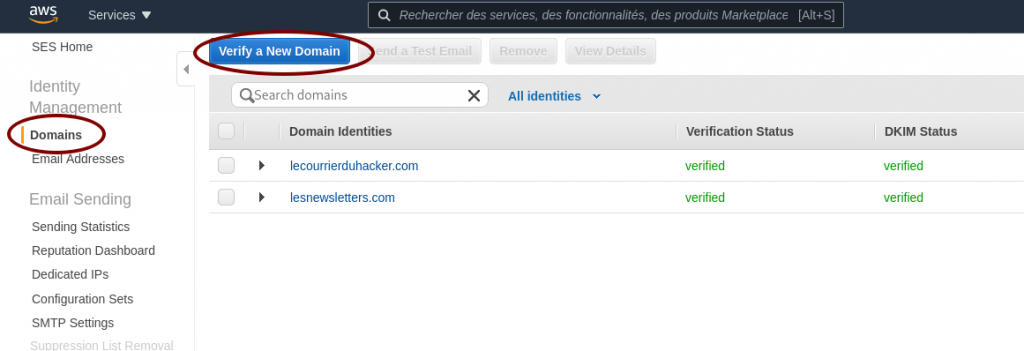

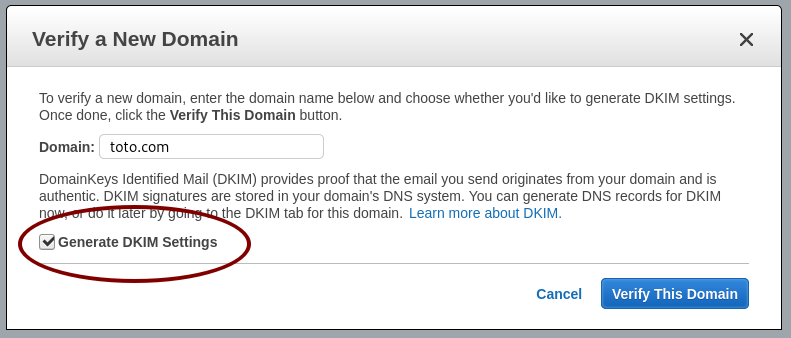

Ask AWS SES to verify a domain

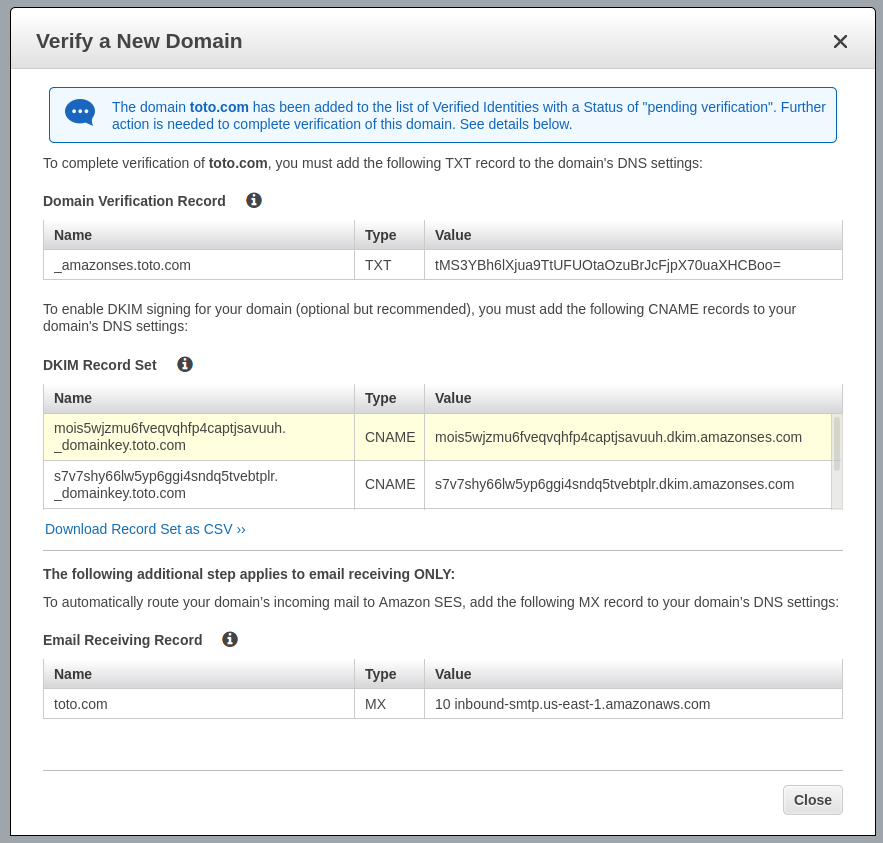

Generate the DKIM settings

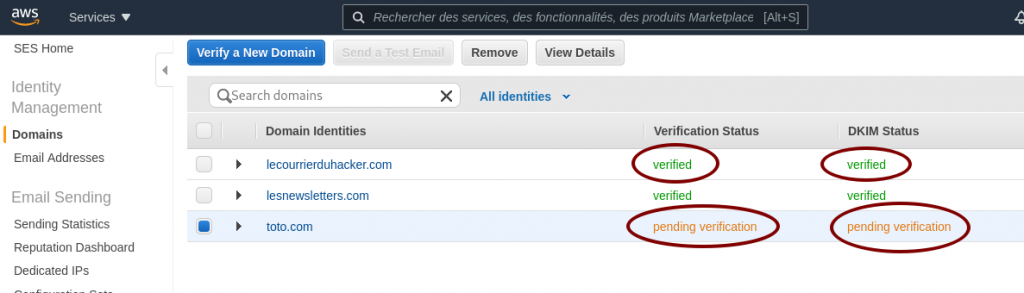

AWS SES pending verification

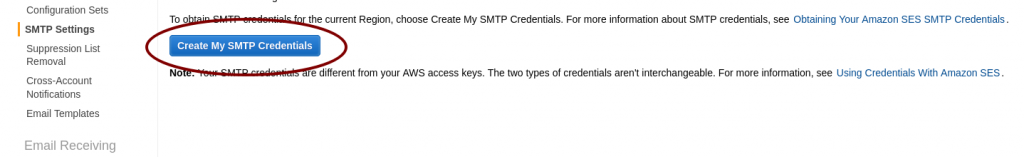

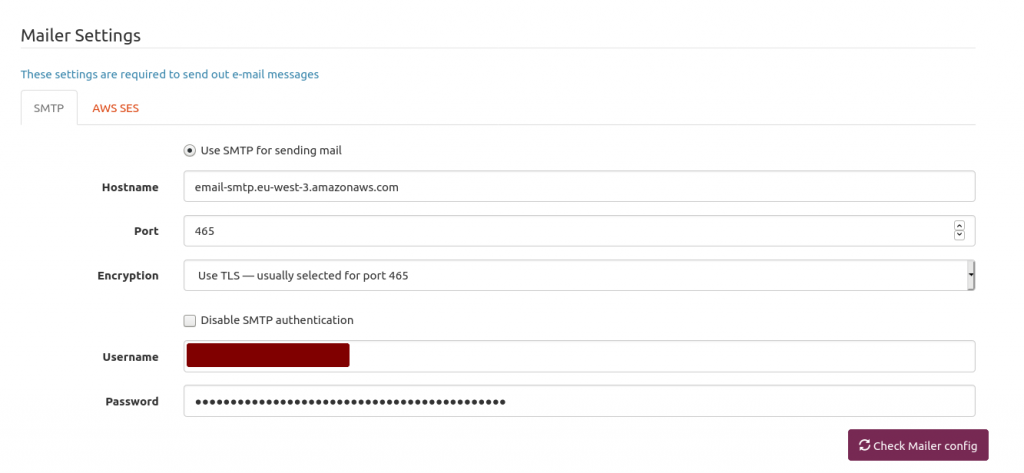

AWS SES SMTP settings and credentials

Mailtrain mailer setup

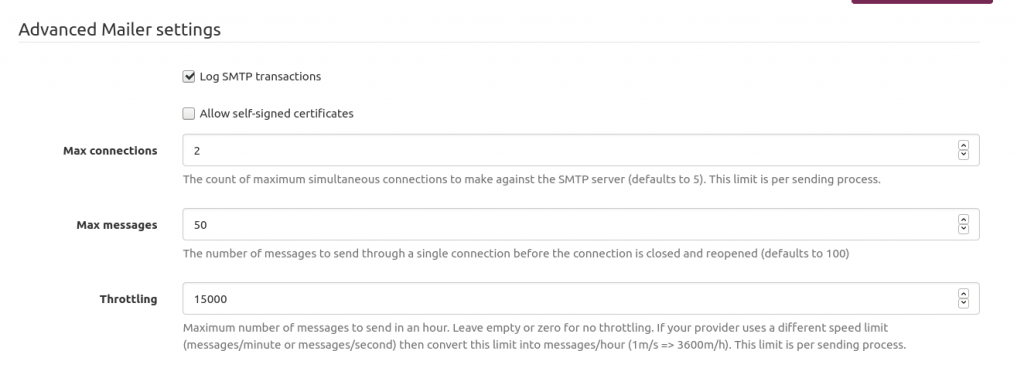

Mailtrain to throttle sending emails to AWS SES

Debian has work-in-progress packages for Kubernetes, which work well enough for a testing and learning environment. Bootstrapping a cluster with the

This article documents how to install